Bob Moog

Bob Moog talks to Clive Williamson of Symbiosis

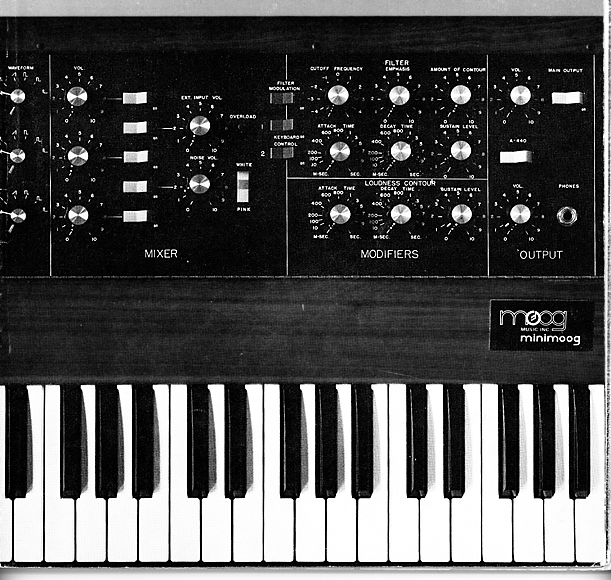

Bob Moog was responsible for some of the most inspiring electronic musical instruments ever… He developed a modular synth system which excited many musicians when it first appeared, and later designed the MINIMOOG, which was the first truly portable keyboard synthesizer intended for live use. An advert for the MINIMOOG in 1972 said, “It looks good, it feels good and, above all it makes musical sense; after all, it is supposed to be a MUSICAL instrument for the modern musician, not a box of electronic gadgetry for an engineer.” At that time the MINIMOOG cost £705. Bob Moog still had lots to say about music and synthesis when Clive Williamson recorded this interview with him in 1990.

You designed one of the most famous synthesizers – the MINIMOOG. What was the inspiration behind those early synthesiser designs of yours?

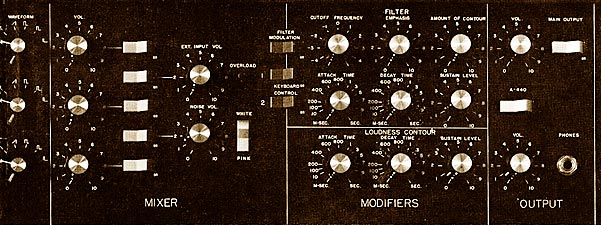

MINIMOOG was actually a second generation… MINIMOOG itself was inspired by a series of instruments that we designed more for experimental musicians where each separate module could do one thing and one thing only towards shaping or generating a sound. These early modular instruments looked more like telephone switchboards than musical instruments because you had lots of patch cords that connected the parts together, whereas the MINIMOOG was all pre-wired together. It was simple enough and quick enough to use so that you could use it on stage. The inspiration for the first modular instruments that we made came from the experiments that the tape music and electronic music composers had been making since the end of the Second World War. These people used tape recorders to make their sounds, and they also used laboratory and broadcast test equipment to make test tones and to manipulate them in certain specific ways, with musical ends in mind.

What sort of people are you talking about when you mention those composers?

Well in Cologne, Germany, there was Karlheinz Stockhausen, perhaps the best known of that particular school. Also there was a gentleman by the name of Herbert Eimert, and in France, at the Paris radio station, there were Pierre Schaeffer and Pierre Henry, who, instead of using electronic sound, preferred using natural sound but processed it electronically. In our country the best known composer was Vladimir Ussachevsky, who founded the Columbia-Princeton Electronic Music Centre in New York City in 1959. All these people were experimenting – they were making a new kind of music. They weren’t sure how the music was going to turn out or what sounds or sound manipulations would be valuable, and as we look back at them today we can see the rapid evolution of a complete new medium of musical expression.

So what effect do you think your first instruments had on the composers that were then able to use them?

These early composers had to make do with what they could find from other scientific and technical fields. They would use war surplus equipment, laboratory equipment – anything at all that could generate or modify a sound electronically. What I did was more or less put it all in one box. I took everything that the musicians had found useful at that time and built it all in a neat form so that it all worked together without fighting and without having to worry about it. One sound source fitted into another instrument, so that it was just a more efficient tool.

This page sponsored by one of the UK’s leading producers of ambient music for relaxation:

Music Video for “The Beauty Within” by Symbiosis (from The Comfort Zone and Amber and Jade)

Find out about their gentle albums blending acoustic instruments and synth sounds here

Did you find that other sorts of people began to use those synthesizers?

Oh yes – our first customers were experimental musicians. In fact we sold some equipment to the BBC in the mid ’60s. Many of the musicians that worked with us at that time were at universities, some were choreographers who made their own dance forms and so on. Once these people began to understand how to use the instruments for making music, the people who made music for advertising commercials latched onto it because the synthesizer turned out to be the perfect way to bridge the gap between sound effects and music. You could make a sound that had some musical properties, but also suggested very explicitly some desirable emotion that was useful in commercials, and through that – at least in our country – a lot of synthesizer sounds were heard on the radio in the years, say, 1966-67. One of these people was Walter Carlos (now Wendy Carlos – Ed.) who then made the record “Switched on Bach”, a complete electronic rendition of the music of Bach, and that became very popular, and brought synthesizer music to the third stage – the stage of its being used for popular records. Then the fourth stage I suppose was to be used live on stage, and that’s where the MINIMOOG came in. The MINIMOOG was very popular among rock musicians in the early ’70s.

Now that instrument isn’t available any more, but it did have a relatively long life for an electronic instrument, didn’t it?

Yes, it was in production from 1970 through I think 1980 or ’81, which is a very long time for an electronic musical instrument to be in production. Today’s typical product life is as short as 6 months and if you’re lucky it’s 18 months to 2 years. The technology is changing very fast and the designs for instruments are changing fast along with it.

So how did your synthesisers work then, and what changes have come about since?

Our synthesizers took advantage of the technology that was just coming out in the late ’60s. It was the technology of transistors, of what we call analogue circuits. An analogue circuit is a circuit that is actually a model of something that vibrates. It actually makes a sound waveform in an electronic form. Today’s instruments are digital, by and large. These instruments construct waveforms from a set of numbers, and because numbers are more precise than analogue circuits, you can make just about any kind of sound you want with today’s synthesizers. What you can’t do, any more than you could with analogue instruments, is control them in the refined, complex ways that you can control traditional acoustic musical instruments.
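(Editor's illustration, not part of the interview.) The idea of a digital instrument constructing a waveform "from a set of numbers" can be sketched in a few lines of Python. This is only a minimal sketch under stated assumptions: the names `make_wavetable` and `render`, the table size and the 44,100 Hz sample rate are all hypothetical choices made for the example, and it does not describe how any particular synthesizer works.

```python
import math

SAMPLE_RATE = 44100  # samples per second (assumed value for this sketch)

def make_wavetable(size=1024):
    """Store one cycle of a sine wave as a plain list of numbers."""
    return [math.sin(2 * math.pi * i / size) for i in range(size)]

def render(table, freq_hz, n_samples, sample_rate=SAMPLE_RATE):
    """Read the table repeatedly; the step size sets the pitch."""
    out = []
    phase = 0.0
    # How many table entries to advance per output sample.
    step = freq_hz * len(table) / sample_rate
    for _ in range(n_samples):
        out.append(table[int(phase) % len(table)])
        phase += step
    return out

table = make_wavetable()
samples = render(table, 440.0, SAMPLE_RATE)  # one second of the note A (440 Hz)
```

Swapping the numbers in `table` for any other single-cycle shape changes the tone colour without touching the playback code, which is one way to read the remark that numbers let you make just about any kind of sound.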

You’re giving us indications there of the shortcomings in synthesizer design. What is your feeling about performance possibilities on synthesizers now?

There are synthesizers today that can make acoustical sounds – and when I say acoustical sounds I mean the sounds of traditional musical instruments – very accurately. So accurately in fact, that it’s hard to tell the difference. But what we musical instrument designers look forward to doing in the next few years, or few decades, is improving the way these instruments are controlled – the way they’re played – and the sorts of keyboards and controls that a musician interfaces with.

What exactly do you mean by “a musician interfacing”?

Well, it’s what happens in fact. Musical instruments provide the most efficient and refined interface between man and machine of anything we know. When a pianist sits down and does a virtuoso performance he is in a technical sense transmitting more information to a machine than any other human activity involving machinery allows. A musician may not be aware of transmitting information since he learns his technique by practising and he plays his music by intuition, but in fact that’s what’s happening! It happens really in the same sense that a typist can transmit information through a typewriter.

So what are the limitations of synthesizers now?

Most synthesizers have keyboards that were designed back in the days of electronic organs where you couldn’t vary sounds and there was no means of imparting expression. On the other hand when you play a violin or you play a piano you have many means of imparting expression into the sound – of changing the loudness of the sound and even the tone colour in very precise and musically meaningful ways. One big area of research these days is in developing new types of keyboards where the keys themselves not only turn the sound on and off by being depressed, but also change the sound through the motion and the force of the finger.

So do you always have to use a keyboard to play these synthesizers then?

No, a keyboard is just one example. One of the great things about synthesizers specifically and electronic music in general is that you can separate the means of control – that is how you play it – from the part that actually generates the sound. You can put any sort of control system that you can devise with any sort of sound that comes out. Some kinds that are available commercially now are what we call wind controllers. They’re shaped like saxophones or clarinets and you play them in much the same way as a wind instrument. As you blow into them, the electronic sound gets louder and softer. That gives an important means of expression. There are other controllers that are built like guitars and still others that are built like drum sets. In the experimental area there are controllers that are sensitive to the motion of the whole body so that they can be actually played by dancers. Now you can have a combination of dance performance and music production.

So with all this research work – and I gather you’re now involved in research – where do you think electronic instruments are going from here?

You know that’s very hard to tell. I think five or ten years ago nobody could foresee where it is now. For sure one thing that we see is that computers are playing a more and more important part in every phase of the production of music – not only in professional music studios and on stage but also for amateur musicians. A typical music studio for an amateur musician these days would have a multi-track tape recorder in it, a computer and a couple of synthesizers. That’s one very important direction and I’m encouraging that! I happen to think that computers are the most important thing to happen to musicians since the invention of cat-gut, which was a long time ago. (Cat-gut of course is what violin strings are made out of.) Another very important area, as I mentioned, is the area of control devices, where the somewhat more traditional technology of mechanical manufacturing is being combined with the current technology of computer sensors and computer processing to enable musicians to vary many properties of sound or many sounds just through the motions of their bodies.

So do you think we’ve achieved that yet, in 1990?

No I don’t think we’ve achieved it. The whole medium of electronic music is less than fifty years old and the whole technology of synthesizers about twenty-five years old now. I think we’re just beginning. Musical instruments typically take centuries to evolve into their final form. The piano after all was invented before 1800. String instruments have evolved over several centuries and I think the same thing is true of electronic musical instruments.

What is your favourite form of synthesis now?

Oh, there is no one type of synthesis that will do everything. There are very few electronic musicians these days who confine themselves to just one type of synthesis. There’s one type of synthesis from sampling that gives you very accurate traditional instrumental tones, there’s another type of additive synthesis which does just the opposite – it enables you to evolve completely new sounds and it’s the ability to use these two together that excites a lot of musicians.
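(Editor's illustration, not part of the interview.) Additive synthesis, mentioned above, builds a tone by summing simple sine-wave partials. The sketch below is a minimal example under stated assumptions: the function name `additive`, the choice of partials and the 44,100 Hz sample rate are hypothetical and chosen only for illustration.

```python
import math

def additive(partials, freq_hz, n_samples, sample_rate=44100):
    """Sum sine partials; `partials` is a list of (harmonic_number, amplitude)."""
    out = []
    for n in range(n_samples):
        t = n / sample_rate  # time of this sample in seconds
        out.append(sum(a * math.sin(2 * math.pi * h * freq_hz * t)
                       for h, a in partials))
    return out

# Odd harmonics with amplitude 1/h give a roughly square-shaped wave.
square_like = [(h, 1.0 / h) for h in range(1, 20, 2)]
tone = additive(square_like, 220.0, 2000)
```

Changing the list of partials changes the timbre, so new sounds can be explored simply by trying different sets of harmonic numbers and amplitudes.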

So is that the direction that we’re going to see future instruments moving in? A blending of sampled and synthesized sounds?

I think so, and I think still newer and more powerful synthesis methods will come into wider use as technology develops. The silicon chips – the computer chips – that are being made available to musicians today were not even known, say five or ten years ago, and these chips can do more and more. It’s very hard to tell what might be evolved five or ten years from today.

At IRCAM in Paris they use an enormous computer to investigate synthesis, which must have cost a fantastic amount of money when it was first introduced. Do you think that the power of something like that could be found in the home musician’s studio in the very near future?

Absolutely. What’s in the home musician’s studio now could not have been done at IRCAM or any other computer music centre anywhere in the world ten years ago. Things are moving that fast, and just recently I was talking to a scientist at Bell Telephone Laboratories in this country [the USA]. He was describing some work he was doing with the latest digital signal processing chips that Bell Labs and AT&T are just now introducing. He was talking about the implications for music and they were staggering, and their projection is that this sort of equipment will be available to the general public in a decade.

And what sort of processing power, or what sort of computer power are you talking about?

Well, now we’re getting a little bit technical. It’s computing power enough to analyze any sort of music in what we call ‘real time’. That is to actually complete the analysis as the music is happening with no perceptible delay, and then to process that and to reconstruct it in a musical way, so as to form a composition system where a musician can – just by the use of computer processing – evolve a piece of music near to something that’s already been written. Or from some rules, or some score that he generates himself. All that sounds very technical, and believe me it is – at this point. But by the time it reaches the general public and the musician public it will be reduced to a tool that we hope musicians will be able to use in a natural way, in an intuitive way, and in a creative way.

So how do you see the actual interaction with this tool by the musician?

Well, at the present time the interaction is through a computer screen – a computer workstation. It’s just a large computer screen with a keyboard. As time goes on this interaction interface will become more specialized to the needs of music, and I don’t think anybody knows what that is today, but we’re going to have fun trying, and finding out what sort of interface the musicians find most useful.

And is that something you’re going to be involved with, do you think?

I hope so. This is an area of my interest and it has been for the past twenty-five years and I expect to continue in it.

Can you imagine how the things are going to sound from this synthesis?

That depends on the musician. Right now there’s the capability of sounding exactly like a symphony orchestra or like some completely new set of sounds that I couldn’t even describe with words, or anything in between. All these things are possible today, the trick is putting them at the fingertips of a musician so he can put them together exactly the way he wants – and the big advance from here on will be what goes between the musician’s fingertips and the sound-producing capability – the synthesizer itself.

Presumably the musician’s understanding of all the equipment will be vital in all this?

Well that’s true. Musicians generally have to have some understanding of any instrument that they use – even the voice. A proficient singer has a greater understanding of how to use his or her voice than, say, I do. I have some understanding of how a piano works, at least enough for me to know how to hold my hands and what fingering to use. And I think the same thing is true of electronic musicians today. They need certain mental tools, certain intellectual equipment to deal with these new instruments.

Coming back to the home level of music production – what we’re seeing today is this incredible growth in home studios that you mentioned earlier. The sort of studio where you have a small four-track tape machine or something like that linked to a computer and some synthesizers, and people can actually make pop singles in their own homes. What are your feelings on that direction?

To the extent that more people now are being able to engage directly in making pop music I think the advances have a positive direction, shall we say. Everybody has certain reservations about how easy it is now – not to make really original good music – but to make pale imitations of music. Something that appears to be music, but is really just something mechanical or filled with gimmicks. One always has to remember these days where the garbage pail is, because it’s so easy to make sounds, and to put sounds together into something that appears to be music, but it’s just as hard as it always was to make good music. Making good music is an essentially human activity and it’s not easy, it takes talent.
